
What is BERT? It’s Google’s biggest step forward in the last five years and a milestone for Search as a whole. But what does it actually do?

Google is the first port of call for many people looking to learn about something. But a lot of the time, users don’t know the best way to phrase their search query. They might misspell words, misuse certain terms or simply not know which wording will return the best results.

People end up using search queries that they think Google will understand, but that seem unnatural to us. This is where BERT comes in. It’s Google’s latest endeavour to give users the best results, arriving five months after the June core update.

Bidirectional Encoder Representations from Transformers (or BERT for short) is a system that processes each word in the context of every other word in the sentence, rather than looking at words one at a time.

This means that in a search query, each word is looked at in relation to the words before and after, helping to better understand the intent of that search.
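To make the "before and after" idea concrete, here is a toy sketch in Python. It is not how BERT is implemented (BERT is a neural network, and nothing here is Google's code); it only shows which words are visible around a given word when you read strictly left to right versus in both directions. The function names are made up for illustration, and the query is one of Google's own examples.

```python
def left_context(tokens, i):
    """Words visible to a strictly left-to-right reader at position i."""
    return tokens[:i]


def bidirectional_context(tokens, i):
    """Words visible when every other word in the query is considered."""
    return tokens[:i] + tokens[i + 1:]


# Query taken from one of Google's published BERT examples.
query = "2019 brazil traveler to usa need a visa".split()
i = query.index("to")

# Reading left to right, "to" is only seen alongside the words before it.
print(left_context(query, i))

# Reading in both directions, "usa" is also in view, so the direction
# of travel (Brazil to the USA) can be taken into account.
print(bidirectional_context(query, i))
```

In the left-only view, the destination "usa" is missing when "to" is read, which is the kind of gap a bidirectional approach is meant to close.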

According to Google, BERT is applied to around 1 in 10 searches in order to return more relevant information.

Some types of queries that are affected:

Google provided us with some examples of BERT in action.

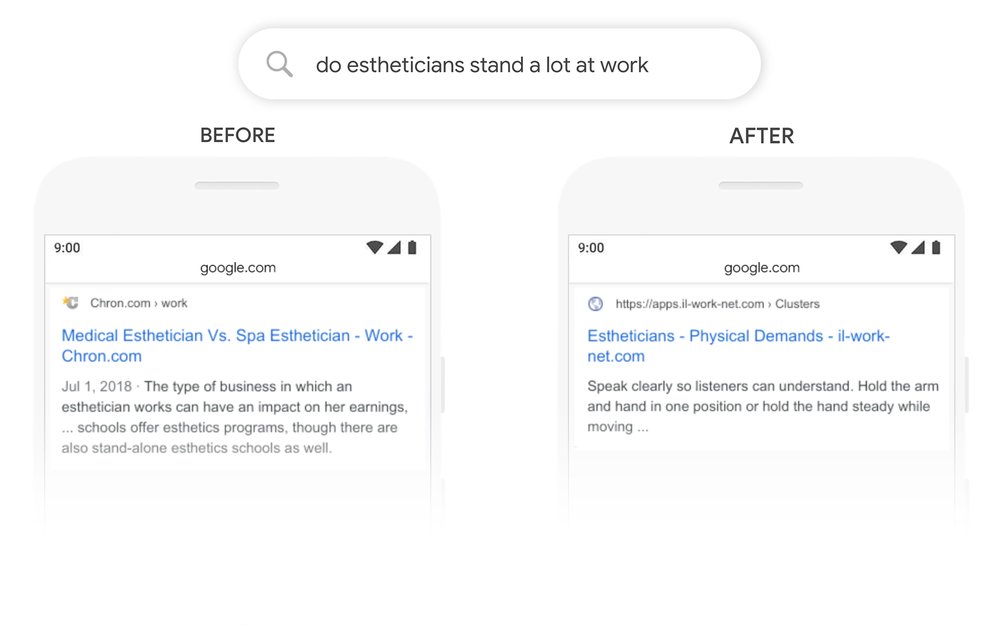

The image below shows the search term “do estheticians stand a lot at work”. Before BERT, the search term and the Google result were matched on a keyword basis. The keyword “stand” from the query was matched with “stand-alone”, resulting in some irrelevant content. But with BERT, Google understands that the word “stand” refers to physically standing.

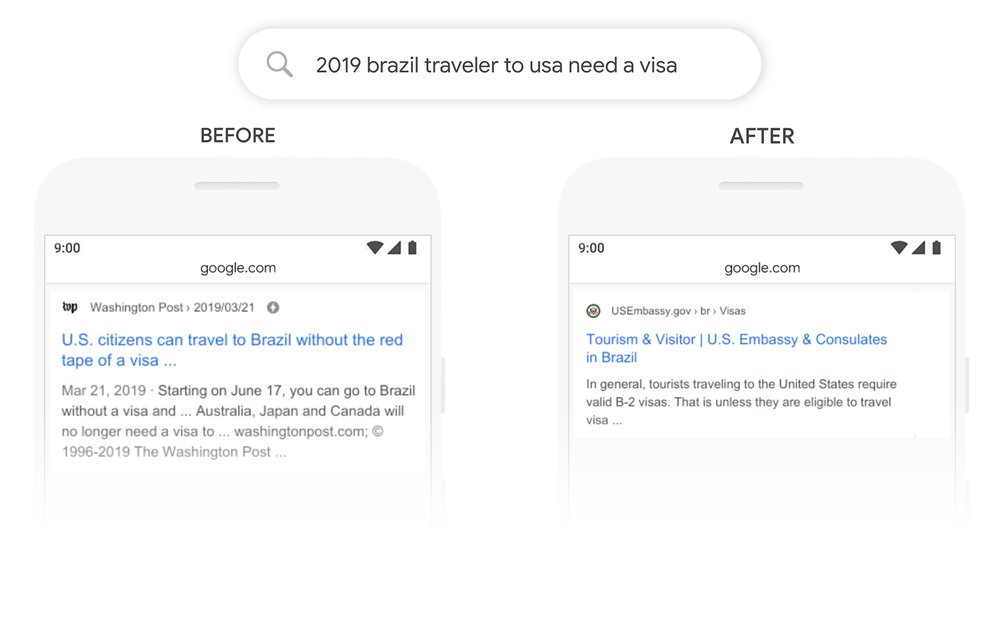
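The “stand” versus “stand-alone” mix-up is easy to reproduce with naive keyword matching. The sketch below is only an illustration: the two page snippets are hypothetical, the function names are made up, and this is not a description of how Google's ranking worked before or after BERT.

```python
import re


def naive_keyword_match(keyword, text):
    """Substring test: ignores word boundaries and meaning."""
    return keyword.lower() in text.lower()


def whole_word_match(keyword, text):
    """Match only complete words, treating hyphenated terms as one word."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return keyword.lower() in words


# Hypothetical page snippets, written for this example only.
relevant = "Estheticians stand for most of the working day"
irrelevant = "Stand-alone treatment rooms for hire"

print(naive_keyword_match("stand", relevant))    # True
print(naive_keyword_match("stand", irrelevant))  # True, a false positive
print(whole_word_match("stand", irrelevant))     # False
```

Even the stricter whole-word check only compares strings. It has no idea that “stand” in the query is about being on your feet, which is the gap that understanding context is meant to fill.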

Another example shows how BERT better understands the query “2019 brazil traveler to usa need a visa”. In this search, someone is asking whether a traveller from Brazil will need a visa to get into the US. Pre-BERT, the first result from Google was information on US citizens travelling to Brazil. But thanks to BERT, Google now sees how the preposition ‘to’ affects the meaning of the sentence.

Multiple regions and languages are already seeing changes in featured snippets. We’ve seen a few improvements already for one of our clients, with some new featured snippets, so it’s definitely already working.

But let us stress this main point: you can’t optimise for BERT. It can’t be done.

There’s no technique you can use to make it work for you, or any new way of using keywords. This is just another step towards Google better understanding natural language use.

So all you can do is create quality content that satisfies a specific intent and you’ll be rewarded, just like before.

Keep up to date with the latest Search news on Twitter @HonchoSearch and our LinkedIn page. Drop us a comment if you’ve seen any changes due to BERT!
