2 min read

Admin : Jan 21, 2021 9:55:26 AM

PRAW stands for “Python Reddit API Wrapper” and is an easy and fun module for collecting data from Reddit. The official documentation can be found here: https://praw.readthedocs.io/en/latest/index.html.

Assuming you already have a Reddit account, visit: https://www.reddit.com/prefs/apps

At the bottom, you will see “Create App” or similar depending on whether you have existing applications in your account.

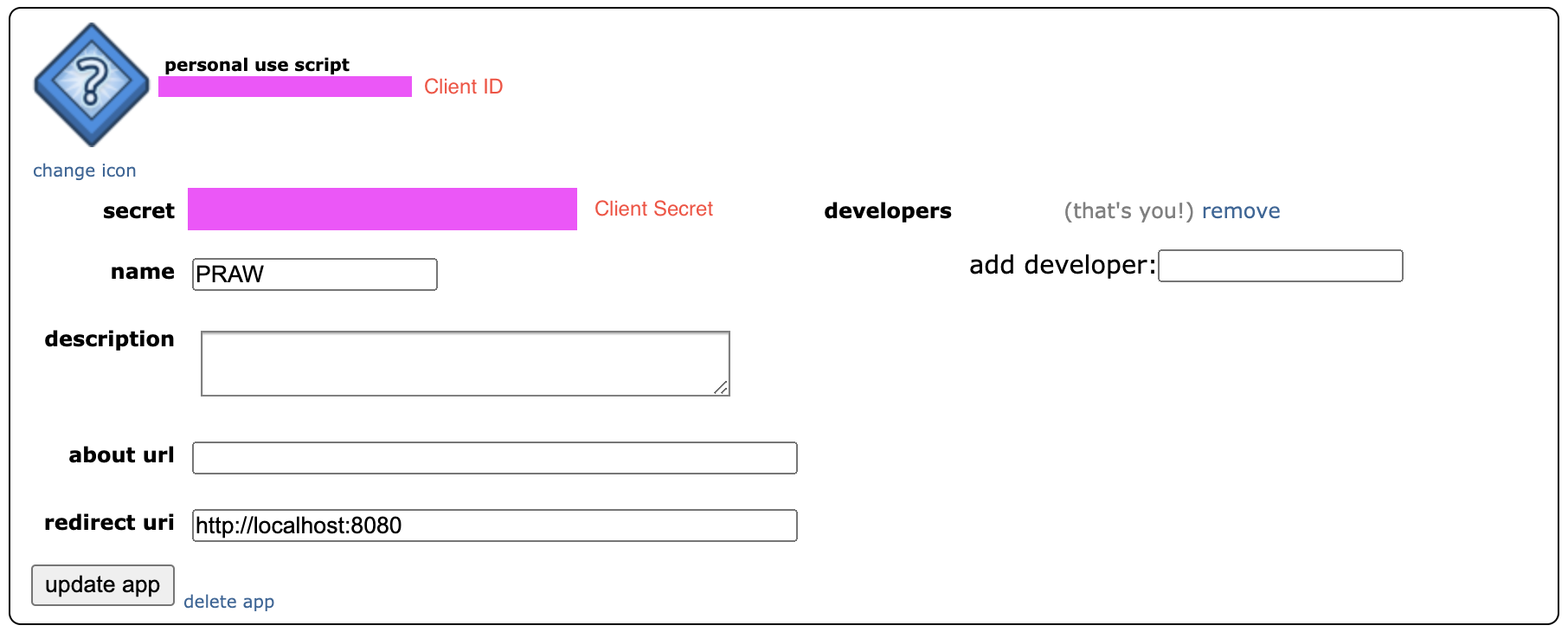

Note down your Client ID & Client Secret (image below where to find them).

Install PRAW in Python. PRAW supports Python 3.6+. The recommended way to install PRAW is via pip:

pip install praw

You need an instance of the Reddit class to do anything with PRAW. This post uses a “read-only” instance, which in basic terms lets you look at submissions in a subreddit as if you were browsing.

Create a new Python file and, using the Client ID and Client Secret, enter your information. The user agent can be left as it is.

import praw

reddit = praw.Reddit(
    client_id="my client id",
    client_secret="my client secret",
    user_agent="my user agent",
)

To test if your instance is working use:

print(reddit.read_only) # Output: True
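If you would rather not paste the Client ID and Client Secret straight into the file, one option is to read them from environment variables. This is only a minimal sketch: the variable names (`REDDIT_CLIENT_ID` and so on) and the helper `load_reddit_credentials` are hypothetical names chosen for illustration, not something PRAW requires.

```python
import os


def load_reddit_credentials(env=os.environ):
    """Collect the three values praw.Reddit needs from environment variables."""
    # The variable names below are assumptions made for this sketch.
    required = {
        "client_id": "REDDIT_CLIENT_ID",
        "client_secret": "REDDIT_CLIENT_SECRET",
        "user_agent": "REDDIT_USER_AGENT",
    }
    # Fail early with a readable message if anything is unset or empty.
    missing = [var for var in required.values() if not env.get(var)]
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    return {key: env[var] for key, var in required.items()}


# Usage (needs praw installed and the variables set in your shell):
# import praw
# reddit = praw.Reddit(**load_reddit_credentials())
```

The helper returns a plain dict, so the keys line up with the keyword arguments used when creating the Reddit instance.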

Choose a subreddit that you want to get submission data for. For my example I’ll use r/pics – where everyone on LinkedIn and Twitter finds their “original” content.

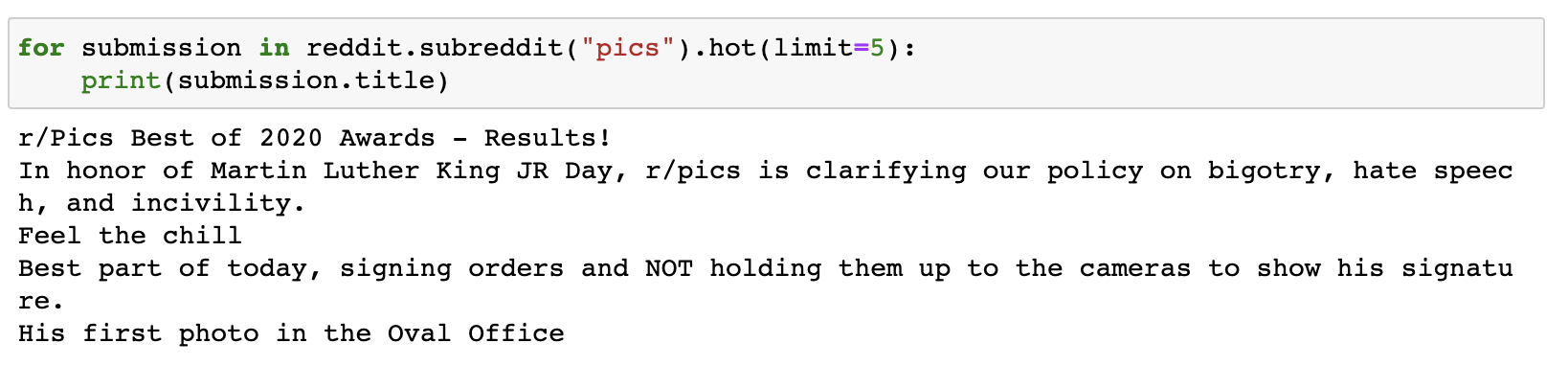

A quick, simple operation: print the submission titles for the 10 hottest posts right now. In the same Python file from above, add:

for submission in reddit.subreddit("pics").hot(limit=10):
    print(submission.title)

You should see the 10 post titles printed, as seen below:

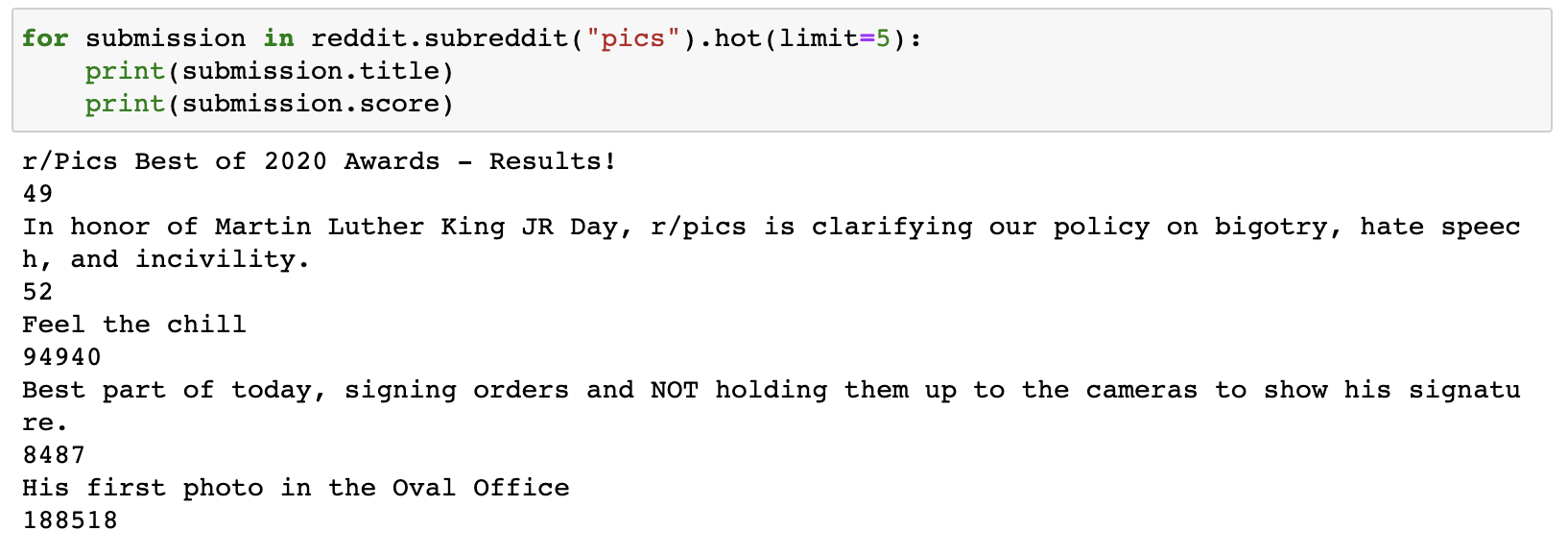
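If you want to reuse the titles rather than just print them, the loop can be wrapped in a small function that returns a list. This is a sketch only: `hot_titles` is a hypothetical helper name, and it assumes `reddit` is a working instance like the one created earlier.

```python
def hot_titles(reddit, subreddit_name, limit=10):
    """Return the titles of the current hot submissions in a subreddit.

    `reddit` is expected to be a praw.Reddit instance.
    """
    subreddit = reddit.subreddit(subreddit_name)
    # .hot() yields submissions lazily; the list comprehension consumes them.
    return [submission.title for submission in subreddit.hot(limit=limit)]


# Usage, assuming `reddit` is the instance from earlier:
# titles = hot_titles(reddit, "pics", limit=10)
# for position, title in enumerate(titles, start=1):
#     print(position, title)
```

Returning a list makes it easier to hand the titles to whatever comes next, such as saving them to a file.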

With PRAW we’re able to extract a lot more than just post titles. The table below lists the attributes I typically use.

| Attribute | Description |
| --- | --- |
| author | Provides an instance of Redditor. |
| num_comments | The number of comments on the submission. |
| score | The number of upvotes for the submission. |
| title | The title of the submission. |
| url | The URL the submission links to, or the permalink if a self-post. |

Since attributes are dynamic, there is no guarantee that the attributes seen in my example or other examples will always be present, nor will any list ever really be 100% accurate. The best way to see all available attributes at any given time is to use the following:

import pprint

# assume you have a Reddit instance bound to variable `reddit`
submission = reddit.submission(id="39zje0")
print(submission.title)  # to make it non-lazy
pprint.pprint(vars(submission))
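Because attributes are not guaranteed to be present, it can help to read them defensively. The sketch below copies a handful of attributes into a plain dict and falls back to `None` for anything missing. The helper name `submission_to_row` and the `FIELDS` tuple are hypothetical, chosen for illustration; converting the author with `str()` assumes the Redditor object prints as its username.

```python
FIELDS = ("author", "num_comments", "score", "title", "url")


def submission_to_row(submission, fields=FIELDS):
    """Copy selected attributes into a dict, using None for any that are absent."""
    row = {field: getattr(submission, field, None) for field in fields}
    # author is an object rather than text (and may be None, e.g. for a
    # deleted account), so store its string form when it is available.
    if row.get("author") is not None:
        row["author"] = str(row["author"])
    return row


# Usage, assuming `reddit` is the instance from earlier:
# rows = [submission_to_row(s) for s in reddit.subreddit("pics").hot(limit=10)]
```

A list of dicts like this is a convenient starting point if you later want to load the data into a table.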

Hopefully this has been an easy introduction to PRAW and using the Submission instance. While there is plenty more you can do from here, such as adding this all into a dataframe and using NLP to uncover sentiment and trends, we’ll leave that for another post.

If you have any questions, contact us at hello@honchosearch.com or find me on LinkedIn.

Subscribe to our email list to receive blog posts and other Python How-Tos directly to your inbox.
