3 min read

Honchō Scoops Up Two UK Search Awards!

It’s official, we've added not one, but two shiny trophies to our awards cabinet! We’re over the moon to share that we’ve triumphed at the UK Search...

7 min read

Admin : Apr 26, 2019 10:47:58 AM

The landscape of search keeps changing as technology advances. Google has recently announced some big innovations that will change the way we search. This autumn, Google will start rolling out updates with a heavy emphasis on visual content and personalisation, making search more accessible than ever before. But how does this affect the SEO world?

Here I'll take you through the big changes on the way. They make extensive searches easier and visual content more meaningful, and they should make the overall experience on Google feel more personal and relevant to you. If you're interested in how Google's March algorithm update may affect your website, that is also worth checking out.

Vision AI is the latest image recognition software from Google. It can identify objects within an image, as well as more abstract ideas such as 'metropolitan areas'.

Read more about it in our post on Google Vision AI, which discusses whether this marks the beginning of the end for alt text.

Have you ever wanted to research a topic in depth? Maybe you're planning an adventurous trip around the country. One quick search is unlikely to satisfy that. In our busy lives, we may not have the luxury of sitting down for an hour typing in search terms.

You may search for a few things over breakfast before you head off to work. By the time you return to Google, you may have half-forgotten what you were searching for and how you found something so specific. You can dig through your history, but retracing your steps quickly becomes tedious.

Google is aware of this and has designed features to help you pick up where you left off and find content that interests you. Google's machine learning will keep track of the content you found interesting and suggest what to look at next. These features are designed to change how Google understands your needs, your interests and your longer search journeys, so that you can find the information you need.

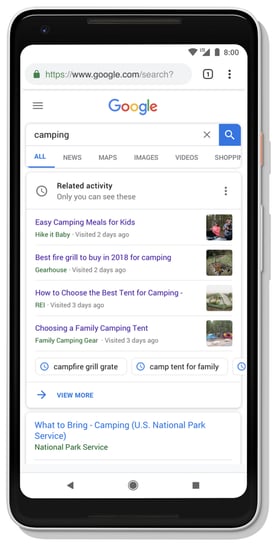

Activity Cards let you pick up where you left off in a neat, user-friendly way. When you revisit a search term, activity cards will appear on your SERP showing pages you previously found and terms you already searched for, reminding you where you got to last time.

Google uses machine learning to recognise when you are revisiting a topic and shows the cards only when they are relevant. The feature is designed as a small reminder to help you find what you want, so it shouldn't majorly affect the SERP. You also have full control over the feature, and only you can see it. You can remove results from your history, pause certain cards or turn the feature off entirely. Google has said that this will be available later this year.

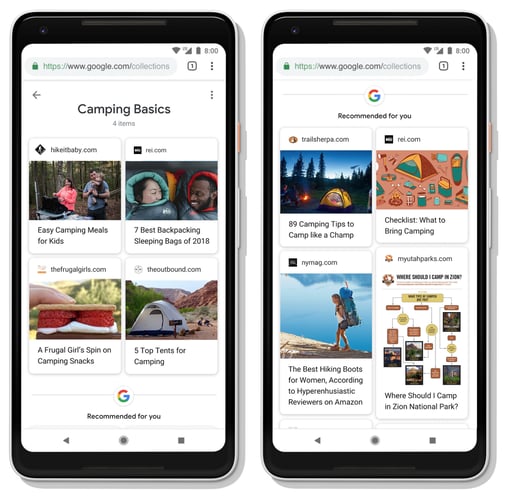

Google has created an interesting new way to organise content you've seen on the SERP. In collections, content appears as small snippet blocks with thumbnails, known as Activity Cards. Collections work much like YouTube, but for written content. YouTube lets you organise videos by adding them to Watch Later or to playlists, and collections let you do the same with articles. This is ideal when you find information you want to read later or revisit.

Of course, you can simply bookmark pages the old-fashioned way, but collections go further. Not only will you have a page with all of your content organised in tidy thumbnail boxes, Google will also suggest more content based on your collection, much as YouTube does. Google says that this feature will arrive later this autumn.

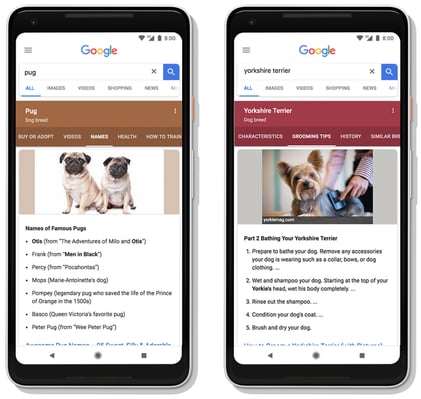

Depending on the topic, search journeys can have layers of depth and subcategories that are easy to get lost in. If you don't know much about the topic, you may not even know what to search for next and may miss important areas of information. Google has recognised this and is introducing a new way to organise search results dynamically.

Google will be replacing static, predetermined categories with dynamic ones that show the subtopics most relevant to what you are searching for. Multiple categories will be presented in a single search as tidy little tabs. With information organised this way, users should get a much clearer idea of what to search for next on a topic, which should significantly reduce the time spent searching.

This feature will learn and change over time to stay fresh. As new information is published, the tabs will update so that they always show the most relevant categories for that topic. Dynamic page organisation is already live for some topics, and Google plans to roll it out further over time.

Google's already expansive Knowledge Graph is about to receive an upgrade. The Knowledge Graph understands the connections and relationships between entities such as people, places and products, along with facts about them. Google has now added a new layer to it called the 'Topic Layer', which is engineered to understand a topic in depth and how interests in it can develop over time.

The Topic Layer analyses all of the content on the web for a given topic and identifies thousands of the most relevant and useful subtopics. Google then looks at patterns to see how these subtopics relate to each other, so that it can suggest more content to users who want to explore further. This makes exploring content easier than ever, and it can suggest things to search for next that the user might not have thought of. This technology has the potential to open up more opportunities for discovery.

Over the years, Google has included more and more imagery in its search results. Live weather reports, video clips and image thumbnails can all be seen in the SERPs. Google is now introducing new AI-powered features to make the search experience more visual and more enjoyable, including changes that make Google Images more useful and meaningful.

Presenting news in a visual, engaging way is an approach adopted by various outlets. The likes of Snapchat and Twitter have been doing this for a while, and Google is now working on its own version, built on its AMP technology in the form of AMP stories. Google will use AI to construct AMP stories and surface them in search results. The feature is still experimental and currently covers topics such as sports and celebrities, using AMP to deliver striking, engaging visual content.

Videos can be a very insightful way to learn about a topic. For some people, video tutorials are a more effective way to learn. Videos of products can entice a user to buy. Videos of locations can give a much better idea of what a place will be like than text or images. Having reorganised text and image content to make search more concise, Google is naturally giving video the same treatment next.

Google says it can now understand the content of a video in depth and help the user find the most useful parts. For example, if you are planning a sightseeing trip to London, you might want to watch a few videos for ideas on where to go. Featured videos will use Google's understanding of the topic to suggest video snippets that help you along your search journey.

Many users now turn to Google Images to find information, not just to view an image. It is increasingly common to use Google Images to see how something looks before clicking through to the content behind it.

Over the last year, Google overhauled its image search algorithm to favour results where both the image and the content on the page are strong. The authority of the page is a big factor in ranking. If you are looking for shoes, the page behind the image is now more likely to be a website about shoes or one that sells them. The algorithm also favours fresh content that has been updated recently.

When users click through to a site from Google Images, they often want to find the image on the page, and that used to be hard. Google now prioritises sites where the image is central or near the top of the page, in other words, where it can easily be found.

This is great when you want to buy something after viewing a product image in Google Images. If you click on an image of a specific shoe, a page dedicated to that shoe will be prioritised over a product range page.

This week, Google will change the way images are displayed by showing context around them, such as captions with the title of the page where the image was published. You will mostly see this on thumbnails linking to articles and product pages, giving you context about where the image comes from before you click through. Google will also suggest related search terms to help you find more relevant images.

Overall, these changes should provide a much better user experience when searching for images.

Visual search is a groundbreaking technology that, once perfected, could become a game changer.

I recently spoke about what this technology means for e-commerce, which can be found here.

Last year, Google launched its own app for this, Google Lens. It lets users take a photo of something to identify what it is, whether that's a landmark, an animal, a product or something else. Even more impressively, Lens lets you inspect images in Google Images and select specific parts of an image to identify them.

It really is clever stuff. For example, if you are looking at a photo of a garden, you might spot a little bonsai tree in the corner and want to know what it is. Lens can answer that question for you.

Lens scans and analyses images and detects objects of interest. If you select part of an image, it will give you information about that object and can even point you to product pages where you can buy it or continue your search. The potential of this technology is huge, and it will be interesting to see how far Google takes it.

In theory, you could take a picture of the Eiffel Tower with Google Lens and get back a Wikipedia page with useful information about the landmark and its history. If Lens understands context, it might recognise that you're looking for transport, so taking a picture of a train station or bus stop could bring up arrival times.

Google says that by dragging your finger across your mobile screen, you can draw on any part of an image to dive deeper into what it shows. This technology could genuinely change the way we search.

Visual content will take the front seat later this year, and as Google continues to evolve, search behaviour will too. Images are now being aligned with text-based content, and Google is working to tie the two together so that they complement each other and serve the user better. This major update is likely to shape SEO strategies for next year.

For more SEO trends to watch out for in 2019/20, check out what the experts think at Alt Agency.
